The robot race is fueling a fight for training data

“A lot of people are scrambling to figure out what’s the next big data source,” says Pras Velagapudi, chief technology officer of Agility Robotics, which makes a humanoid robot that operates in warehouses for customers including Amazon. The answers to Velagapudi’s question will help define what tomorrow’s machines will excel at, and what roles they may fill in our homes and workplaces.

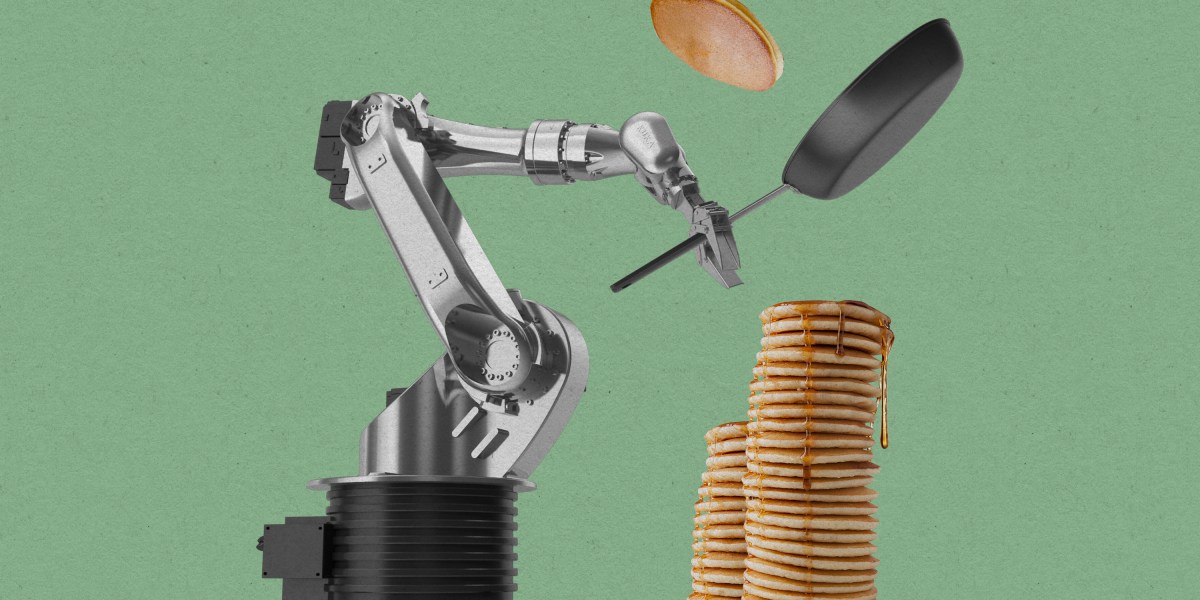

Prime training data

To understand how roboticists are shopping for data, picture a butcher shop. There are prime, expensive cuts ready to be cooked. There are the humble, everyday staples. And then there’s the case of trimmings and off-cuts lurking in the back, requiring a creative chef to make them into something delicious. They’re all usable, but they’re not all equal.

For a taste of what prime data looks like for robots, consider the methods adopted by the Toyota Research Institute (TRI). In a sprawling laboratory in Cambridge, Massachusetts, equipped with robotic arms, computers, and a random assortment of everyday objects like dustpans and egg whisks, researchers teach robots new tasks through teleoperation, creating what’s called demonstration data. A human might use a robotic arm to flip a pancake 300 times in an afternoon, for example.

The model processes that data overnight, and the robot can often perform the task autonomously the next morning, TRI says. Since the demonstrations show many iterations of the same task, teleoperation creates rich, precisely labeled data that helps robots perform well on new tasks.

The trouble is, creating such data takes ages, and the amount you can collect is limited by the number of expensive robots you can afford. To create quality training data more cheaply and efficiently, Shuran Song, head of the Robotics and Embodied AI Lab at Stanford University, designed a device that can be used more nimbly with your hands and built at a fraction of the cost. Essentially a lightweight plastic gripper, it can collect data while you use it for everyday activities like cracking an egg or setting the table. The data can then be used to train robots to mimic those tasks. Using simpler devices like this could fast-track the data collection process.

Open-source efforts

Roboticists have recently alighted upon another method for getting more teleoperation data: sharing what they’ve collected with one another, which saves each team the laborious process of building data sets alone.

The Distributed Robot Interaction Dataset (DROID), published last month, was created by researchers at 13 institutions, including companies like Google DeepMind and top universities like Stanford and Carnegie Mellon. It contains 350 hours of data generated by humans doing tasks ranging from closing a waffle maker to cleaning up a desk. Since the data was collected using hardware that’s common in the robotics world, researchers can use it to create AI models and then test those models on equipment they already have.

The effort builds on the success of the Open X-Embodiment Collaboration, a similar project from Google DeepMind that aggregated data on 527 skills, collected from many different types of hardware. The data set helped build Google DeepMind’s RT-X model, which can turn text instructions (for example, “Move the apple to the left of the soda can”) into physical movements.